About

Publications

(* indicates equal contribution)

D3Fields: Dynamic 3D Descriptor Fields for Zero-Shot Generalizable Rearrangement

[Project Page] [Paper] [Code]

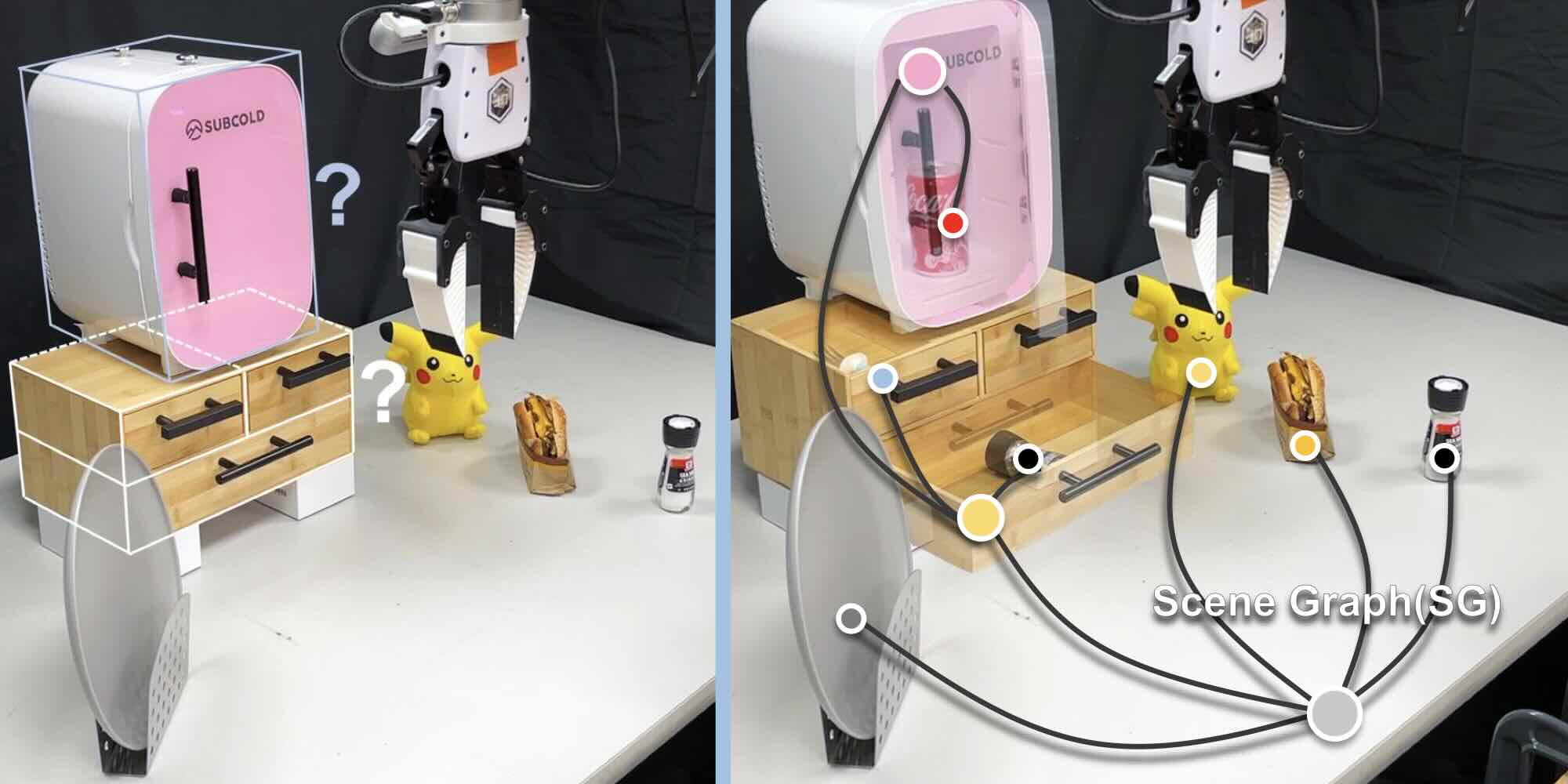

RoboEXP: Action-Conditioned Scene Graph via Interactive Exploration for Robotic Manipulation

[Project Page] [Paper] [Code]

Selected Honors & Awards

- Dean's List, NUS

- Certificate of Outstanding Performance & Top Students in CS4243 Computer Vision and Pattern Recognition (graduate-level course), NUS

- Certificate of Best Project Award in CS4246/CS5446 AI Planning and Decision Making (graduate-level course), NUS

- The Science & Technology Undergraduate Scholarship (a full undergraduate scholarship awarded to outstanding students), NUS & Singapore Ministry of Education

Experiences

Research Assistant @ Stanford & NUS

Advisors: Prof. Jiajun Wu and Prof. David Hsu

Working on automatically learning accurate planning abstractions—state abstractions (predicates) and action abstractions (skills)—from everyday human videos for robot planning, using neural-symbolic methods and foundation models. Developing novel task planners to reason and plan with these learned abstractions more effectively.

Undergraduate Research Assistant @ NUS

Group of Learning and Optimization Working in AI (GLOW.AI)

Advisor: Prof. Bryan Low

Worked on my undergraduate honors thesis on leveraging large multimodal models (LMMs) for decision making and planning under uncertainty, with applications in autonomous driving and robotics.

Undergraduate Research Assistant @ UIUC

Robotic Perception, Interaction, and Learning Lab (RoboPIL)

Advisor: Prof. Yunzhu Li

Worked on building action-conditioned scene graphs via interactive exploration for robotic manipulation, incorporating large multimodal models (LMMs).

Undergraduate Visiting Research Intern @ Stanford

Stanford Vision and Learning Lab (SVL)

Advisors: Prof. Jiajun Wu and Prof. Yunzhu Li

Worked on designing an ideal scene representation for embodied AI, one that is 3D, dynamic, and semantic, by leveraging foundation models.

Undergraduate Research Assistant @ NUS

Adaptive Computing Lab (AdaComp)

Advisor: Prof. David Hsu

Worked on designing the control and system architecture for autonomous long-horizon visual navigation.

Academic Service

Teaching Assistant

- CS3244: Machine Learning, Fall 2021, NUS

- CS2040: Data Structures and Algorithms, Fall 2020, NUS
